Written by: Ding Zhengtian, Camille Jouanet, June Bhartia, Max Guilloré, Abdennour Kerboua

Abstract

Welcome to our Quantitative UX Blogpost! Here, you’ll find everything you need to know about our project, including case studies, data collection processes, methodologies, and experimental results. Our focus is on studying an AR game tutorial (Ingress), designing and conducting user experiments, and collecting data to evaluate and improve the game’s user experience.

Ingress is a location-based augmented reality (AR) game developed by Niantic, Inc., originally released in 2013. It transforms the real world into a digital battlefield where players, known as “agents,” interact with real-world landmarks through their mobile devices to capture and control territories. The game is built on Niantic’s AR technology, using GPS to turn physical locations into “portals,” often located at landmarks, public art, and other points of interest. Players capture portals for their faction, link them to form triangular “fields,” and compete for control of territory.

Case-study description

What is it?

Our project focuses on Ingress, a location-based AR game by Niantic that turns real-world landmarks into “portals” for gameplay. Players capture, link, and control these portals using GPS and real-world movement, creating an immersive blend of physical and virtual experiences.

What is its purpose?

- Encourage outdoor exploration and foster collaboration among players.

- Discover new locations.

- Engage in strategic decision-making.

- Work as a team to achieve faction-based goals.

Who is its main audience?

- Mobile gamers

- AR enthusiasts

- Individuals who enjoy strategy-driven, team-based gameplay

Why did we choose it?

Through our experience with the game, we found it to be complex and cumbersome for a mobile game, particularly in terms of its world-building and interface guidance. With numerous interactive gameplay options, we wanted to explore whether its tutorials effectively support new players.

Data

Data types

For our study, we used a combination of participant-provided data and system-collected data to analyze user interactions with the Ingress game.

Participant-provided: participant questionnaires.

System-collected: touch interaction data.

Data sources

- Participant questionnaires (qualitative and quantitative)

  - Pre-experiment questionnaire: gathered demographic and background information.

  - Post-experiment questionnaire: captured participants’ overall impressions of the game.

  - Final questionnaire: collected detailed reflections on the game experience.

- Touch interaction data (quantitative)

  - We used Android Debug Bridge (ADB) to capture touch event data from participants’ interactions with the game.

  - Each recorded event included timestamps, touch positions, and interaction sequences, stored in CSV format for further analysis.

Data Collection & Anonymization

- All data were collected by us, with no external sources involved.

- Touch interaction data and questionnaire responses were anonymized, ensuring that no personally identifiable information was linked to participants.

- Informed consent was obtained before data collection to maintain ethical research standards.
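One common way to keep questionnaire rows and touch logs linkable without storing names is to give each participant a random code. The sketch below illustrates that idea under our own assumptions; it is not necessarily the procedure used in this study, and `assign_codes` and the `P01`-style labels are hypothetical.

```python
import random

def assign_codes(names, seed=None):
    """Map each participant name to a random label such as 'P03'.

    The mapping should be stored apart from the analysis files (or
    discarded) so that the data only ever contains the labels.
    """
    rng = random.Random(seed)
    shuffled = list(names)
    rng.shuffle(shuffled)  # break any link to sign-up order
    return {name: f"P{i:02d}" for i, name in enumerate(shuffled, start=1)}

# Placeholder names, for illustration only.
codes = assign_codes(["alice", "bob", "carol"], seed=0)
print(sorted(codes.values()))  # ['P01', 'P02', 'P03']
```

Shuffling before numbering means the label order says nothing about the order in which people took part.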

Methods

Experiment script

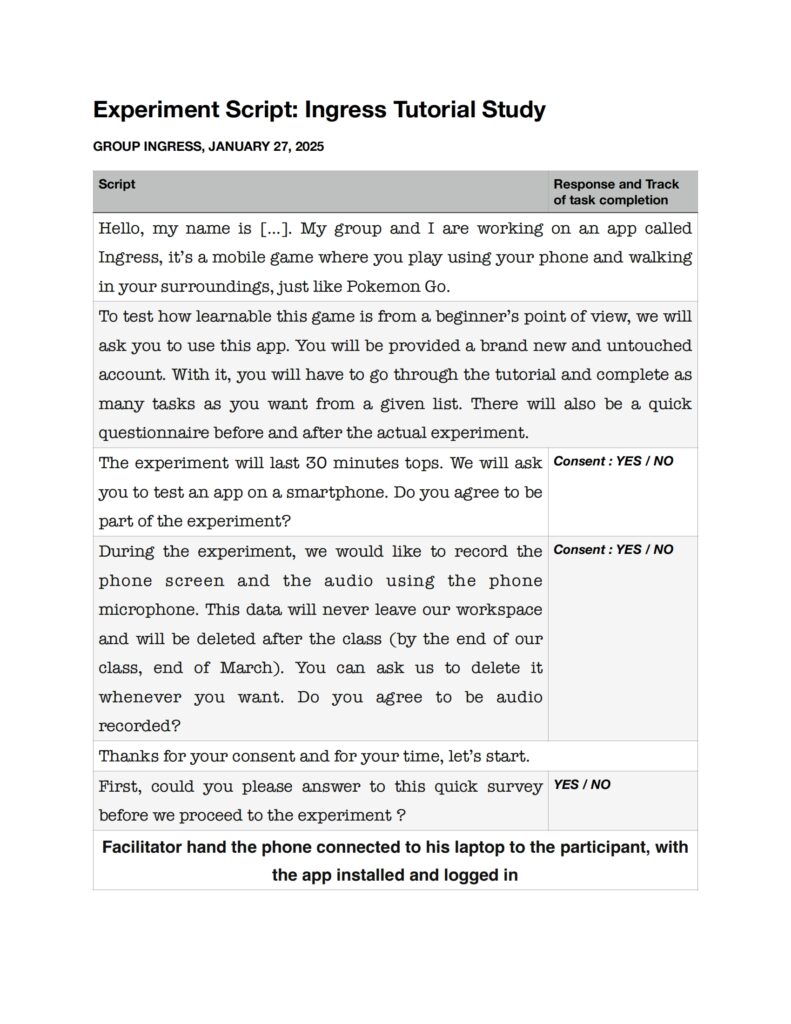

First, we designed the experiment script, which begins with an introduction to the purpose of the study, followed by a series of questions for the participants. We emphasized the importance of protecting user information, ensuring that the experiment was conducted only after obtaining informed consent.

Over the course of the experiment, participants completed three questionnaires. The first, administered before the experiment, collected basic demographic and background information. The second, given at the end of the experiment, allowed participants to express their impressions of the game through a multiple-choice format. Finally, the third provided an opportunity for participants to articulate their detailed thoughts and experiences in written form.

Flowchart

Next, to facilitate data collection and analysis, we created a flowchart of the Ingress game, mapping out the possible interfaces resulting from each step of interaction. We then categorized these interfaces into three main groups: the landing page, the main menu, and other miscellaneous screens. This classification provided a structured approach to understanding user interactions within the game.

We used an online annotation tool to annotate and keep track of users’ touches.
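The three-group classification can be represented as a simple lookup table that turns a sequence of annotated screens into per-category counts. This is a minimal sketch: the screen names are hypothetical placeholders, and only the three category names come from the flowchart described above.

```python
from collections import Counter

# Hypothetical screen names mapped to the three flowchart categories.
SCREEN_CATEGORY = {
    "landing_page": "landing page",
    "main_menu": "main menu",
    "inventory": "other",
    "portal_detail": "other",
}

def categorize(screens):
    """Count annotated screens per category; unknown screens count as 'other'."""
    return Counter(SCREEN_CATEGORY.get(s, "other") for s in screens)

visits = ["landing_page", "main_menu", "inventory", "main_menu", "settings"]
for category, count in categorize(visits).items():
    print(f"{category}: {count}")
```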

Task Completion

During the experiment, we asked participants to complete two sets of tasks. Before, during, and after these tasks, we surveyed the participants to collect qualitative feedback.

Task #1

Participants completed the basic tutorial of the game, learning its fundamental concepts and operations.

Task #2

Participants completed as many tasks as possible from the given assignments, which included both simple and complex tasks.

Collecting data (Android Debug Bridge, ADB)

We collected data by recording each participant’s touch events on the phone screen and storing them as a CSV file.

To do this, we used a tool that records and replays touchscreen events on Android devices through ADB (Android Debug Bridge). It captures touch input data with getevent and replays it using sendevent or mysendevent. This kind of tool is useful for automation testing, UI interaction analysis, and touch data collection. Additionally, a Python script converts the event logs into CSV format for easier analysis.
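The conversion script itself is not reproduced in this post, so the following is only a sketch of what such a step could look like. It assumes the log was captured with `adb shell getevent -lt` (labelled events with timestamps) and that the device reports positions through the `ABS_MT_POSITION_X` and `ABS_MT_POSITION_Y` codes. The function names and CSV column names are our own choices for illustration.

```python
import csv
import io
import re

# Matches lines printed by `adb shell getevent -lt`, for example:
# [   49033.370412] /dev/input/event1: EV_ABS  ABS_MT_POSITION_X  00000219
# The device prefix is optional (it is absent when a device is given).
LINE_RE = re.compile(
    r"\[\s*(?P<time>\d+\.\d+)\]\s+(?:(?P<device>\S+):\s+)?"
    r"(?P<type>\S+)\s+(?P<code>\S+)\s+(?P<value>\S+)"
)

def parse_touch_log(lines):
    """Yield (timestamp, x, y) for each SYN_REPORT frame that updated a position."""
    x = y = None
    moved = False
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # skip headers and anything that is not an event line
        code = m["code"]
        if code == "ABS_MT_POSITION_X":
            x, moved = int(m["value"], 16), True  # values are hexadecimal
        elif code == "ABS_MT_POSITION_Y":
            y, moved = int(m["value"], 16), True
        elif code == "SYN_REPORT":
            if moved and x is not None and y is not None:
                yield float(m["time"]), x, y
            moved = False

def to_csv(lines):
    """Return the parsed touch positions as CSV text with a header row."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["timestamp", "x", "y"])
    writer.writerows(parse_touch_log(lines))
    return out.getvalue()

sample = [
    "[   49033.370412] /dev/input/event1: EV_ABS       ABS_MT_TRACKING_ID   0000002a",
    "[   49033.370412] /dev/input/event1: EV_ABS       ABS_MT_POSITION_X    00000219",
    "[   49033.370412] /dev/input/event1: EV_ABS       ABS_MT_POSITION_Y    000003e8",
    "[   49033.370412] /dev/input/event1: EV_SYN       SYN_REPORT           00000000",
]
print(to_csv(sample))
```

Coordinates come out in the touchscreen's raw units, which may need scaling to screen pixels depending on the device.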

Results

Qualitative Results

Participants widely perceived Ingress as difficult to understand (68.8%) and to learn (87.5%), and all of them (100%) agreed that it is highly complex. Over half found it demotivating (56.4%) and inefficient (53.3%). These findings indicate that Ingress has a steep learning curve and is overly complicated.

Quantitative Results

Longer tutorial times may lead to increased task difficulty and completion time while reducing task completion rates, suggesting that tutorial complexity may hinder user learning and task performance.
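A relationship like this can be checked with a correlation coefficient between tutorial time and a task outcome. The sketch below computes Pearson's r by hand. The numbers are made up purely for illustration and are not our measurements, and a correlation alone would not establish that the tutorial causes the difference.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Made-up values for illustration only, not the study's data.
tutorial_minutes = [4, 6, 8, 10]
tasks_completed = [9, 7, 6, 3]
print(round(pearson(tutorial_minutes, tasks_completed), 2))  # -0.98
```

A value close to -1 would mean that participants with longer tutorial times tended to complete fewer tasks.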

Our analysis of screen flow revealed navigation patterns, showing that users frequently switch between the portal and home screens. Many users return to the home screen instead of the menu, requiring an extra click to navigate back, leading to inefficiencies.
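Navigation patterns of this kind can be surfaced by counting how often each screen follows another in an annotated session. The sketch below shows the idea; the session and its screen names are hypothetical placeholders rather than recorded data.

```python
from collections import Counter

def count_transitions(screens):
    """Count consecutive (from_screen, to_screen) pairs in one session."""
    return Counter(zip(screens, screens[1:]))

# Hypothetical annotated session; screen names are placeholders.
session = ["home", "portal", "home", "menu", "home", "portal"]
for (src, dst), n in count_transitions(session).most_common():
    print(f"{src} -> {dst}: {n}")
```

Summing these counters across participants gives the edge weights of a screen-flow graph, where frequent back-and-forth pairs such as home and portal stand out.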

Overall Results

- Ineffective tutorial: Not all aspects of gameplay are explained, and the amount of information is overwhelming

- Game Mechanics: Overly complicated systems with too little time to master them

- Bad UI Design: Navigation through the menus is tedious and some parts of the game are unnecessarily hidden

Insights and Recommendations

1. Improve Navigation Flow

Optimize transitions between screens to reduce unnecessary clicks, ensuring users can efficiently return to the menu.

2. Simplify Explanations and Add Visuals

Provide clearer explanations with visuals to help users grasp complex concepts more easily.

3. Clarify Action Labels

Modify action names to better distinguish different functionalities and reduce confusion.

4. Redesign the Tutorial

Break down the tutorial into smaller, progressive mini-tutorials to introduce features gradually as users explore the UI.

5. Fix Exit Menu Behavior

Ensure that exiting from a secondary screen redirects users to the main menu instead of the game screen for a smoother user experience.